There are many predictions about how technology, and automation in particular, will alter our day-to-day lives. The creative arts are more often than not left out of them, since the common sentiment is that you can’t synthesize creativity. But what if we drew the creativity, at least in part, from the beholder rather than the producer?

Advances in artificial intelligence made their way into music years ago. There is software that will generate a soundtrack for your indie short film or even finish Beethoven’s unfinished symphony. Truly remarkable technology. Perhaps less impressive, but to me even more interesting, is a piece of software called Stratus. Developed by Ólafur Arnalds and Halldor Eldjarn, Stratus responds to a played chord with a seemingly ‘random cloud’ of notes based on that chord. Ever since hearing about Stratus, and later about the concept of aleatoric music (explained further below), one question has stuck in my mind: at some point in the future, will I be able to generate music based on the exact situation I am in at that moment?

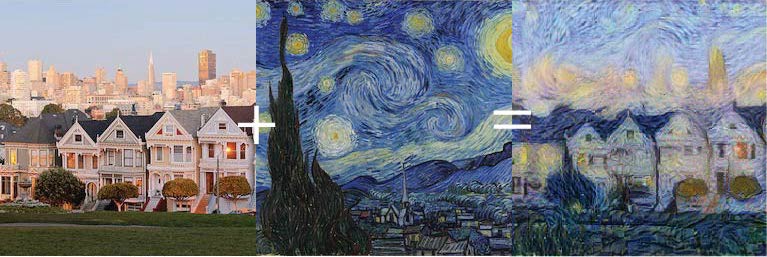

Let’s get futuristic. Imagine having a device that translates your brainwaves and senses into harmonies that play in the rhythm of your heartbeat. It gets quiet when you enter a dark room, dissonant when you are annoyed and fast-paced when you are working out. Imagine the endless possibilities of generating music based purely on your experiences! The first thing I would probably do is check out what famous artworks, like Starry Night, would sound like: a style transfer from painting to music. Artificial neural networks that perform a style transfer from one picture to another already exist. The same goes for music, although with less success.

source: https://medium.com/@build_it_for_fun/neural-style-transfer-with-swift-for-tensorflow-b8544105b854

And if you ever used the classic Windows Media Player on Windows XP to play your brand-new copy of Coldplay’s ‘Parachutes’, you’ll know there is also software that translates music into visuals. Those visuals get pretty boring after a while, though, and don’t really represent the musical piece. The style of a piece of music seems to be that much harder to grasp and quantize. That’s not for lack of trying, though.

When you look for innovations in music theory, you will almost always end up at the concepts of Neue Musik, a collective term for 20th-century conceptual music that aims to change and extend our means and understanding of music in terms of tonality, harmony and rhythm. One of those concepts is serialism. This composition technique attempts to quantize every aspect of music into ordered series and then uses these series, more or less rigidly, to compose a piece. Now, serial pieces often sound pretty strange to most people, and the technique wasn’t created for generating music, but it nevertheless provides a set of tools you could use to quantize composing. Neat!
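To make the idea of ‘quantizing’ a bit more tangible, here is a small Python sketch of the most classic serial tool: a twelve-tone row and its four basic forms (prime, inversion, retrograde and retrograde inversion). The row itself is an arbitrary example I made up, and the duration series at the end is just one illustrative way of pushing the same numbers onto another aspect of music, roughly in the spirit of total serialism.

```python
"""Sketch: a twelve-tone row and its basic serial transformations.

The example row is arbitrary and made up for illustration; it is not
taken from any particular composition.
"""

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def prime(row: list[int], transpose: int = 0) -> list[int]:
    """Prime form, transposed by `transpose` semitones (pitch classes mod 12)."""
    return [(p + transpose) % 12 for p in row]


def inversion(row: list[int]) -> list[int]:
    """Mirror every interval around the row's first pitch class."""
    first = row[0]
    return [(2 * first - p) % 12 for p in row]


def retrograde(row: list[int]) -> list[int]:
    """The row played backwards."""
    return list(reversed(row))


def retrograde_inversion(row: list[int]) -> list[int]:
    """The inversion played backwards."""
    return retrograde(inversion(row))


def duration_series(row: list[int]) -> list[int]:
    """Reuse the same numbers for rhythm: pitch class n -> (n + 1) sixteenth notes.

    This mapping is one hypothetical choice; serial composers used many others.
    """
    return [p + 1 for p in row]


def names(row: list[int]) -> str:
    """Readable note names for a list of pitch classes."""
    return " ".join(NOTE_NAMES[p] for p in row)


if __name__ == "__main__":
    # Hypothetical row: every pitch class 0-11 appears exactly once.
    row = [0, 11, 7, 8, 3, 1, 2, 10, 6, 5, 4, 9]
    assert sorted(row) == list(range(12))
    print("P0: ", names(prime(row)))
    print("I0: ", names(inversion(row)))
    print("R0: ", names(retrograde(row)))
    print("RI0:", names(retrograde_inversion(row)))
    print("durations (16ths):", duration_series(row))
```

Because the inversion mirrors around the first note, P0 and I0 always start on the same pitch; everything after that is fully determined by the series.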

Let’s move away from the obstacle of generating music and look at what I mean by ‘music of the moment’. About a year ago I started listening to the music of Terry Riley. I actually documented it in this blog post (which is exactly one of the reasons why I am doing this blog). His most famous composition is arguably the minimalist piece ‘In C’. Performances of ‘In C’ range from as short as 15 minutes to over an hour, and the number of performing musicians varies greatly as well. That is because the course of ‘In C’ depends heavily on the performers. The piece consists of 53 short musical phrases that every musician works through in order. While the content of those phrases is predetermined, every musician decides for themselves when to move on to the next phrase. This gives ‘In C’ its aleatoric element.

The term aleatoric music was coined by Werner Meyer-Eppler, who wrote: “A signal is aleatoric, if its process is roughly determined […] but depends on chance in detail.” In a way, you could say that aleatoric music is similar to improvised music. Or maybe even that improvised music is a loose subset of aleatoric music? Anyway, I digress. Of course, a musician deciding to move on to the next phrase is not technically random. The decision is guided by many physical and mental influences, too many to grasp, so it might as well be chance. The idea of music being mostly guided by the influences of the moment is fascinating to me.
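Out of curiosity, here is a toy simulation of that aleatoric mechanism in Python. Each simulated musician repeats their current phrase and, after every repetition, moves on with a fixed probability, which stands in for all those influences of the moment. The probability, the ‘beat’ as a unit of time and the rule that nobody runs more than a few phrases ahead of the slowest player are my own simplifying assumptions, not a transcription of Riley’s performance directions.

```python
import random
from typing import Optional

NUM_PHRASES = 53  # 'In C' consists of 53 short phrases, played in order


def perform_in_c(num_musicians: int, advance_prob: float = 0.2,
                 max_spread: int = 3, seed: Optional[int] = None) -> int:
    """Simulate one performance and return how many 'beats' it lasted.

    One beat = every musician plays their current phrase once. After each
    beat, a musician moves on with probability `advance_prob` (a stand-in
    for chance), but never more than `max_spread` phrases ahead of the
    slowest musician. Both parameters are illustrative assumptions.
    """
    if num_musicians < 1:
        raise ValueError("need at least one musician")
    if not 0.0 < advance_prob <= 1.0:
        raise ValueError("advance_prob must be in (0, 1]")
    if max_spread < 1:
        raise ValueError("max_spread must be at least 1")

    rng = random.Random(seed)
    positions = [0] * num_musicians  # phrase index per musician; 53 = finished
    beats = 0
    while min(positions) < NUM_PHRASES:
        beats += 1
        slowest = min(positions)
        for i, pos in enumerate(positions):
            if pos >= NUM_PHRASES:
                continue  # this musician has finished and stays silent
            too_far_ahead = pos - slowest >= max_spread
            if not too_far_ahead and rng.random() < advance_prob:
                positions[i] = pos + 1
    return beats


if __name__ == "__main__":
    # Same 53 phrases, same ensemble size, different 'moments' (seeds).
    for seed in range(3):
        print(f"performance {seed}: {perform_in_c(8, seed=seed)} beats")
```

Different seeds give performances of different lengths from the exact same material, which mirrors the 15-minutes-to-over-an-hour range in a very crude way.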

I think this concept might be the key to making generated music of the moment a reality. You would have an artist engineer rules for every aspect of music, much like Riley wrote his phrases, combined with concepts from serialism. These rules would apply not only to basic attributes like tonality, harmony, rhythm, timbre and dynamics, but also to higher-order concepts, which would ultimately determine something like a genre. It is probably a huge undertaking just to provide a canvas and a set of colors for your individual senses, biosignals and physical surroundings to draw on.
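A very rough sketch of what such artist-authored rules could look like, in Python. The inputs (heart rate, light level and an ‘annoyance’ score) echo the imaginary device from earlier, and every name, range and threshold here is a hypothetical choice for illustration; actually measuring something like annoyance is a whole problem of its own.

```python
from dataclasses import dataclass


@dataclass
class Moment:
    """Hypothetical snapshot of the listener's situation."""
    heart_rate_bpm: float  # e.g. from a fitness tracker
    light_level: float     # 0.0 = dark room, 1.0 = bright daylight
    annoyance: float       # 0.0 = calm, 1.0 = very annoyed (assumed measurable)


@dataclass
class MusicalParameters:
    tempo_bpm: float
    velocity: int          # MIDI-style loudness, 0-127
    chord: list[int]       # semitone offsets above a root note


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


# Each rule covers one aspect of the music; an artist would author these.
def tempo_rule(m: Moment) -> float:
    # Play in the rhythm of the heartbeat, within a sensible range.
    return float(clamp(m.heart_rate_bpm, 40.0, 200.0))


def dynamics_rule(m: Moment) -> int:
    # Quieter in the dark, but never completely silent (20..120).
    return int(round(20 + 100 * clamp(m.light_level, 0.0, 1.0)))


def harmony_rule(m: Moment) -> list[int]:
    # More annoyance -> more dissonant chord voicings.
    a = clamp(m.annoyance, 0.0, 1.0)
    if a < 0.33:
        return [0, 4, 7]       # major triad
    if a < 0.66:
        return [0, 3, 7, 10]   # minor seventh chord
    return [0, 1, 6, 10]       # dissonant: minor second and tritone


def compose_moment(m: Moment) -> MusicalParameters:
    """Combine the per-aspect rules into one set of musical parameters."""
    return MusicalParameters(tempo_rule(m), dynamics_rule(m), harmony_rule(m))


if __name__ == "__main__":
    workout = Moment(heart_rate_bpm=150, light_level=0.9, annoyance=0.1)
    print(compose_moment(workout))
```

In this picture, the higher-order concepts (the ‘genre’) would live in which set of rule functions the artist plugs in.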

Circling back to Stratus, you might now understand why I think this piece of software is a step in the right direction. Whatever note you put in, Stratus responds with complex but fitting music, which turns every input of yours into a collaborative piece of art between you and the artists who created Stratus.
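Just to illustrate the general idea of a chord-based ‘random cloud’, here is a toy Python version. To be clear, this is not how Stratus works; I don’t know its internals. It simply keeps every note inside the pitch classes of the played chord, moves it to a random octave and scatters it over a few seconds. The parameter names and ranges are my own assumptions.

```python
import random
from typing import NamedTuple, Optional


class Note(NamedTuple):
    onset: float   # seconds after the chord was played
    pitch: int     # MIDI note number
    velocity: int  # MIDI velocity


def note_cloud(chord: list[int], size: int = 16, spread_s: float = 4.0,
               octaves: tuple[int, ...] = (-1, 0, 1, 2),
               seed: Optional[int] = None) -> list[Note]:
    """Scatter `size` notes drawn from the chord's pitch classes over time."""
    if not chord:
        raise ValueError("chord must contain at least one note")
    rng = random.Random(seed)
    notes = []
    for _ in range(size):
        base = rng.choice(chord)                 # stay inside the harmony
        pitch = base + 12 * rng.choice(octaves)  # but jump to another octave
        if not 0 <= pitch <= 127:
            pitch = base                         # fall back to a valid MIDI note
        onset = rng.uniform(0.0, spread_s)       # random moment in the window
        velocity = rng.randint(30, 90)           # soft, varied dynamics
        notes.append(Note(round(onset, 3), pitch, velocity))
    return sorted(notes)                         # chronological order


if __name__ == "__main__":
    c_major = [60, 64, 67]  # C4, E4, G4
    for note in note_cloud(c_major, size=8, seed=42):
        print(note)
```

Every seed gives a different cloud, but because each note is drawn from the chord, the result always ‘fits’ the input.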

It only seems fitting to close this post with the song ‘momentary’ from Arnalds’ album re:member, in whose creative process Stratus played an integral part (though I don’t know whether it did for this particular song).

Let me know your thoughts and ideas on this topic!

Nice one, Joja! It makes me wonder how tools like these will elevate music in the near future. A high-level jazz musician who adopts this, or who is taught with it already in their arsenal, might form new and untold connections in their own brain, with highly interesting results. There will always be low-effort stuff that isn’t great, but I think this type of thing has huge potential in the hands of highly musical artists.

Also I would like to hear what Viktor could make for us based on his situation. 10/10 would listen.

Yes! I’m also thinking of enhancing the live experience with stuff like that. With how insanely polished record productions are these days, audiences may be disappointed when artists don’t reach that same level live. Jazz gigs are often different: the musicians play completely different versions of their recorded tracks, which is all the more satisfying. Last year I saw Nubya Garcia absolutely kill ‘Lost Kingdom’, using it to travel through different modes, dynamics, rhythms and styles and stretching it into a 25-minute masterpiece. I’d like to see technology enable musicians to add this kind of novelty and variety to each of their shows. People already use and develop interactive lighting, loopers and a wide range of audio effects and gimmicks to elevate the live experience, so there’s definitely a trend.